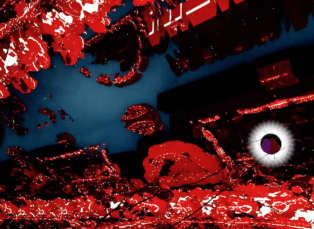

FOLD is an experimental VR exploration game where the environment is created by projections of three-dimensional fractals.

Other areas of experimentation include a non-linear, fragmented narrative conveyed through audio-only feedback, unique movement mechanics, and navigation by spatialised audio cues.

The project started in November 2017 as part of the Game Design and Production studies at Aalto University, Helsinki. The team is:

Valtteri Bade − art, design, programming

Matias Harju − sound, music, design

Helena Sorva − story, design, programming

Ville Talonpoika − design, programming

FOLD was showcased at the klingt gut! sound symposium in Hamburg in June 2018.

Sonic challenges

From a sound design perspective, the project raises several interesting challenges. One is the use of audio as the key narrative component. Because the game world is abstract and otherworldly, human voices and carefully crafted sonic elements may help the player feel empathy and connect with the story.

The game environment is actually "virtual reality" inside virtual reality: all the forms and shapes are just 2D projections of mathematical functions displayed in front of the player within the 3D game world. This creates a fascinating starting point for sound design. What does it sound like inside the fractals, and is the player inside them in the first place or just "hallucinating"? Do the fractals have a sound? Can the player interact with the surroundings, and will that produce sound? For the demo we decided that the different environments in the game should emit different kinds of music, but a practical problem arose: where should the audio sources be located, and how should the musical elements be divided between them?

A technical challenge is to use binaural spatialisation to its full potential so that the player can navigate the abstract environment using only her/his ears. Currently I'm using Wwise as the audio middleware. For the first demos I used the Google Resonance spatialiser, then switched to Auro-Headphone by Auro-3D thanks to a free academic license from Aalto University. However, neither plugin produces very realistic-sounding results: sounds are not externalised enough, at least for my ears and head anatomy. The best spatialiser I have tested so far is DearVR, but as they haven't released a Wwise plugin, I had to rebuild the project's audio structure. The key audio elements that require authentic spatialisation are now played back through the Unity audio engine with the DearVR spatialiser, while all the interactive and generative audio comes from the Wwise engine. It may not be the most orthodox method, but so far it is the only solution I've come up with.

Another interesting challenge is, of course, the fact that our world is an infinite space. Binaural encoders rely partly on simulating a room's early reflections, but in our case the world should be anechoic. Or should it? Maybe the fractals reflect sound in some very special way, or maybe everything sounds as if it were in a cathedral? There is still a lot to experiment with.

Finally, still from the sound perspective, another interesting topic is procedural audio and music as a way to enhance the narrative and the overall experience. The current version of FOLD already implements some interactive sound elements, such as synthesizers that produce "the sound of the fractals" by reading the distance to the shapes and changing pitch, filtering and volume accordingly.
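To illustrate the idea, here is a minimal sketch of such a distance-to-parameter mapping, written in Python. It is not FOLD's actual implementation: the function name `fractal_sound_params`, the near/far distances and the pitch, cutoff and gain ranges are all hypothetical assumptions. In a game engine, the returned values would be passed to the synthesizer every frame; that wiring is not shown here.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SynthParams:
    pitch_hz: float   # oscillator frequency
    cutoff_hz: float  # low-pass filter cutoff
    gain: float       # linear amplitude, 0.0 to 1.0


def exp_lerp(a: float, b: float, t: float) -> float:
    """Interpolate between a and b (both > 0) on a logarithmic scale."""
    return a * (b / a) ** t


def fractal_sound_params(
    distance: float,
    near: float = 0.5,    # hypothetical: closest distance that matters
    far: float = 50.0,    # hypothetical: beyond this the shape is silent
    pitch_range: tuple = (110.0, 440.0),
    cutoff_range: tuple = (200.0, 8000.0),
    gain_range: tuple = (0.0, 1.0),
) -> SynthParams:
    """Map the distance to a fractal shape onto synth parameters.

    Closer shapes sound higher, brighter and louder.
    """
    # Normalised closeness: 1.0 at `near` or closer, 0.0 at `far` or beyond.
    t = (far - distance) / (far - near)
    t = min(max(t, 0.0), 1.0)

    pitch = exp_lerp(pitch_range[0], pitch_range[1], t)
    cutoff = exp_lerp(cutoff_range[0], cutoff_range[1], t)
    gain = gain_range[0] + (gain_range[1] - gain_range[0]) * t
    return SynthParams(pitch, cutoff, gain)


if __name__ == "__main__":
    for d in (0.0, 10.0, 25.25, 50.0, 100.0):
        p = fractal_sound_params(d)
        print(f"distance {d:6.2f}: pitch {p.pitch_hz:7.1f} Hz, "
              f"cutoff {p.cutoff_hz:7.1f} Hz, gain {p.gain:.2f}")
```

Pitch and cutoff are interpolated on a logarithmic scale because perceived pitch and brightness follow frequency ratios rather than differences; gain is interpolated linearly here only for simplicity. Clamping `t` keeps the parameters inside their ranges when the player is very close to or very far from a shape.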

Images: Screenshots from the game

Video: Author and the team