In my master's thesis (2021) for the Sound in New Media programme at Aalto University, I explored the narrative possibilities of audio augmented reality (AAR) with six-degrees-of-freedom (6DoF) positional tracking. By analysing related experiences and literature, I proposed a list of narrative techniques characteristic of the medium. I also designed and constructed a prototype of a 6DoF AAR setup to get first-hand experience of the medium and test some of the narrative techniques in practice.

Audio augmented reality with six-degrees-of-freedom

AAR is gaining momentum with the current renaissance of augmented reality (AR) and mixed reality (MR) under the contemporary umbrella term extended reality (XR). Since the term AAR was first introduced in the 1990s, the medium has been researched extensively, and with new technologies and wearable devices, interesting AAR applications are becoming available to wider audiences. However, the narrative possibilities of AAR remain under-explored territory. This is especially true for AAR that utilises 6DoF positional tracking. In 6DoF AAR the user can move freely in a space while hearing spatially synchronised virtual sounds embedded in the environment.

6DoF

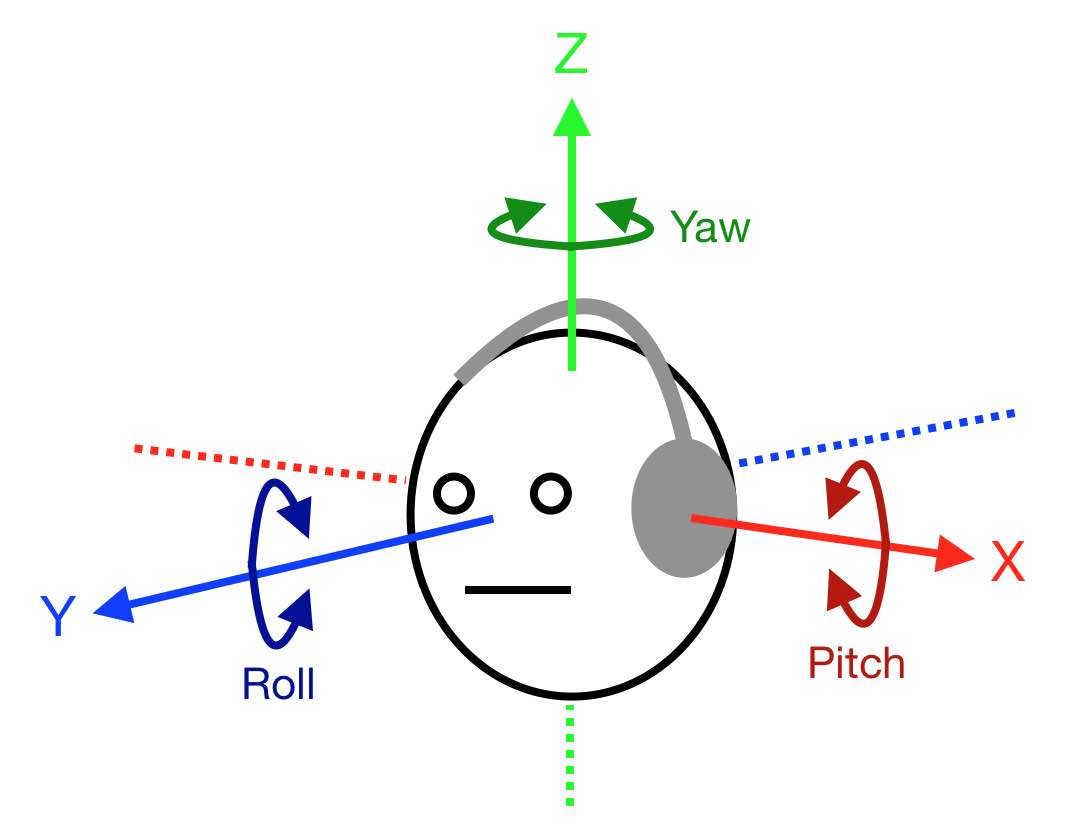

When an object can freely move in a three-dimensional space it is said to have six-degrees-of-freedom (6DoF): three degrees refer to position coordinates in Euclidean space (x, y and z axes) while three others refer to orientation angles (yaw, pitch and roll).
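To make the definition concrete, here is a minimal Python sketch of a 6DoF pose and of why both halves matter for spatial audio: a virtual sound fixed in the room has to be re-expressed relative to the listener's head each time the head moves or turns. The names (`Pose6DoF`, `direction_and_distance`) and the axis convention (x forward, y left, z up, rotations applied as yaw, then pitch, then roll) are assumptions made for illustration, not the implementation used in the thesis prototype.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DoF:
    # Three positional degrees of freedom, in metres (room coordinates).
    x: float
    y: float
    z: float
    # Three rotational degrees of freedom, in radians.
    yaw: float    # rotation about the z (up) axis
    pitch: float  # rotation about the y axis
    roll: float   # rotation about the x (forward) axis

    def rotation(self):
        """Head-to-room rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll)."""
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        return [
            [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr],
        ]


def to_head_frame(pose, point):
    """Express a room-fixed point in the listener's head coordinates."""
    d = (point[0] - pose.x, point[1] - pose.y, point[2] - pose.z)
    r = pose.rotation()
    # Multiply by the transpose of R, which is the inverse of a rotation.
    return tuple(sum(r[j][i] * d[j] for j in range(3)) for i in range(3))


def direction_and_distance(pose, point):
    """Return (azimuth in degrees, elevation in degrees, distance in metres)."""
    lx, ly, lz = to_head_frame(pose, point)
    distance = math.sqrt(lx * lx + ly * ly + lz * lz)
    azimuth = math.degrees(math.atan2(ly, lx))  # positive to the left
    elevation = math.degrees(math.atan2(lz, math.hypot(lx, ly)))
    return azimuth, elevation, distance


# A listener at the origin who has turned 90 degrees to the left.
listener = Pose6DoF(0.0, 0.0, 0.0, yaw=math.radians(90), pitch=0.0, roll=0.0)
# A sound source 2 m along the room's y axis is now straight ahead.
azimuth, elevation, distance = direction_and_distance(listener, (0.0, 2.0, 0.0))
```

With orientation tracking alone, only the rotation part could be updated; the positional part is what lets the listener walk up to, around, or away from a sound.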

6DoF AAR carries many intriguing possibilities for storytelling and immersive experiences. For example, it can be used to convey an alternative narrative of a certain place through virtual sounds interplaying with the real world. The medium is also powerful in creating plausible illusions of something happening out of the user's sight, for instance, behind or inside an object. Unlike in traditional augmented reality (AR) with a visual display, in AAR the user's sight is not disrupted at all. This may be beneficial in places where situational awareness is important, such as museums, shopping centres and other urban environments.

Narrative techniques of 6DoF AAR

In my thesis I concentrated on what is arguably characteristic of the medium: use of spatialised virtual audio, interplay between real and virtual, and interactivity based on the user's location and movements. To have tools to analyse and create 6DoF AAR experiences, I identified a set of narrative techniques characteristic of the medium:

SPATIAL POSITIONING TECHNIQUES

Attachment

- virtual sound attached to a real-world object

Detachment/Acousmêtre

- sound has no perceivable counterpart in the real environment

Location affiliation

- soundscapes triggered by entering certain areas

Spatial offset

- e.g., sound of an airplane appears to be lagging behind

Within-reach

- sound inside the 'play area'

Out-of-reach

- sound outside of the 'play area'

Spatial asynchronisation

- virtual world coordinates relative to something other than the real environment

CONTEXTUAL TECHNIQUES

Match

- sound matches its real-world counterpart

Mismatch

- sound not matching its real-world counterpart

Additive enhancement

- additional sound or effect attached to a real-world sound

Masking

- real-world sound masked by a virtual sound

Manipulation

- real-world sound manipulated by replacement

EXAMPLES OF DYNAMIC TECHNIQUES

Change between attached and detached/acousmatic sound

- e.g., a portrait on the wall talks, then the voice detaches from the frame and starts to move around in the room while still talking

Change between matched and mismatched sound

- e.g., a person talking on TV with lip-sync, then the voice suddenly starts to vocalise the person's inner thoughts without lip-sync

Zooming

- e.g., hearing a distant sound as if it were close by, or zooming into the soundscape of a dollhouse

INPUT METHODS

Location

- triggering events based on the user's (head or other body part) position in the 2D or 3D space

Head orientation

- e.g., using ray tracing to estimate where the user is looking

More detailed explanations, analysis, and practical examples of the individual techniques can be found in the thesis linked above.
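As a rough illustration of the two input methods, the following Python sketch tests whether the tracked head position lies inside a circular floor zone, and whether a ray cast along the head's forward axis passes through a sphere standing in for a real-world object. Everything here is hypothetical: the names (`TriggerZone`, `gaze_direction`, `gaze_hits_sphere`), the zone and object sizes, and the axis convention (x forward at zero yaw, y left, z up, positive pitch tilting the head downwards) are assumptions, and the prototype itself was built in Unity rather than with this code.

```python
import math
from dataclasses import dataclass


@dataclass
class TriggerZone:
    """A circular area on the floor plan, in metres (hypothetical helper)."""
    name: str
    cx: float
    cy: float
    radius: float

    def contains(self, x, y):
        # Location input: is the tracked position inside the zone?
        return math.hypot(x - self.cx, y - self.cy) <= self.radius


def gaze_direction(yaw, pitch):
    """Unit vector along the head's forward axis (angles in radians)."""
    return (
        math.cos(pitch) * math.cos(yaw),
        math.cos(pitch) * math.sin(yaw),
        -math.sin(pitch),
    )


def gaze_hits_sphere(origin, direction, centre, radius):
    """Head-orientation input: does the forward ray pass through the sphere?"""
    oc = tuple(c - o for c, o in zip(centre, origin))
    dist_sq = sum(v * v for v in oc)
    if dist_sq <= radius * radius:
        return True  # the head is already inside the sphere
    # Distance along the ray to the point closest to the sphere centre.
    t = sum(a * b for a, b in zip(oc, direction))
    if t < 0:
        return False  # the object is behind the user
    closest_sq = dist_sq - t * t
    return closest_sq <= radius * radius


# Example: a zone in front of a portrait, and the portrait as a small sphere.
zone = TriggerZone("portrait area", cx=3.0, cy=0.0, radius=1.5)
head = (2.0, 0.5, 1.7)  # tracked head position
in_zone = zone.contains(head[0], head[1])

portrait = (4.0, 0.5, 1.7)
looking_at = gaze_hits_sphere(head, gaze_direction(0.0, 0.0), portrait, 0.3)
looking_away = gaze_hits_sphere(head, gaze_direction(math.pi, 0.0), portrait, 0.3)
```

In practice the two inputs are likely to be combined, for example starting a portrait's monologue only when the user is both inside its zone and facing it, and some tolerance is needed because a head-mounted tracker reports where the head points rather than where the eyes look.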

Prototype

In the prototype I built, the user was able to move in a room wearing open-back headphones and hear virtual audio objects superimposed three-dimensionally onto the physical real-world environment. Different narrative cues and interactions were applied according to the user's location and head orientation. I ended up building the prototype with the following components:

UWB tracking system by Pozyx with an IMU-equipped tag attached to the headphones and six anchor units placed on the walls at different heights.

MacBook Pro computer with Unity game engine / executable build with DearVR spatialiser plugin

RME Fireface UCX audio interface

LD System MEI 1000 G2 IEM transmitter and belt-pack receiver

Beyerdynamic DT 990 PRO open-back headphones

Discussion

I believe 6DoF AAR has potential for unique narrative content that cannot be realised in any other medium. I'm also confident that, using off-the-shelf components and readily available authoring tools, anyone with knowledge of sound design, programming and storytelling can create gripping, immersive 6DoF AAR experiences, although the technological approach should be chosen carefully.

As for the tracking technology, another approach worth exploring instead of UWB could be image-based inside-out tracking, where built-in cameras and machine vision deduce the device's location and orientation. Since there would be no need for fixed beacons or other installations, the approach could be extremely convenient for public spaces and delicate environments, while improving immersion by keeping disruptive technology out of sight.

To explore the topic further, it would be interesting to realise a large-scale narrative experience using some of the core techniques and strengths of the medium. For instance, 6DoF AAR could be used to challenge the dominant narratives of historical venues and events. Another direction could be to concentrate on the multimodality of the experience from the user's perspective; for instance, how the perception and interpretation of the auditory content is influenced by the sensory stimuli of the real world. The problem of acoustic transparency and augmentation of auditory reality would also be worth researching more from the storyteller's point of view: since hearing is omnidirectional, and environments are full of distracting sounds, it seems difficult to frame the user's attention in hear-through AAR. A fundamental question might be: how can the user become immersed in a storyworld while constantly being reminded of the real world?

Also, someone should come up with a bit more captivating name for 6DoF AAR!

Credits and acknowledgements

Advisors: Prof. Sebastian Schlecht, Marko Tandefelt

Narrative consultation: Emilia Lehtinen

Technical help: Matti Niinimäki, John Lee

AAR consultation: Hannes Gamper, Steffen Armbruster

Additional acknowledgements: Kaisa Osola, Eva Havo, friends and other test users

Woman's voice: Michelle Falanga

Robert's voice: Preston Ellis

Anselmo's voice: Rey Alvarez