Freud, la dernière hypnose ("Freud's last hypnosis") is an experimental 360° film and research project by French director Marie-Laure Cazin. By interpreting EEG signals, the film changes some of its musical and visual elements in real time according to the spectator's emotions, measured as valence and arousal. The film premiered at Laval Virtual in March 2019, and it is still being developed and showcased. Currently, a new EEG interaction system is being implemented.

I have worked on the project as the sound editor, sound designer, composer and programmer: first through an internship from Aalto University from January to July 2018, and after that as a freelancer over several production periods. My main task at the moment is arranging and programming the adaptive music to make it meaningfully reflect the measured emotions. I am also doing most of the overall coding for the project.

In this post, I will share my experiences on the project during the initial production period in 2018. I will also explain the workflows I've been using during the post-production. I'm going to be quite detailed, so I have divided the post into several chapters. You'll find the navigation links at the end of this first page.

Neuro-interactive 360 film

In 2014, Marie-Laure Cazin realised an interactive film project, Cinéma émotif, in which the storyline of her film Mademoiselle Paradis changed according to the spectators' emotions. A couple of selected audience members wore EEG headsets, and their valence (positive−negative) and excitement levels (high−low) were measured as they watched the film. This data was then used to change the course of the film in real time, together with some audio effects on the soundtrack.

Freud is a similar project, but this time the spectator is inside a 360° film called Freud − La dernière hypnose. The film takes the audience into Sigmund Freud's last hypnosis session with his young patient Karl. The story is based on Jean-Paul Sartre's text Scénario Freud.

Unlike in Cinéma émotif, the emotions do not change the storyline; instead, they affect the music and some visual elements in certain parts of the film. The spectator is placed in either Freud's or Karl's subjective (first-person) position, although there are cuts to objective (third-person) vantage points, too.

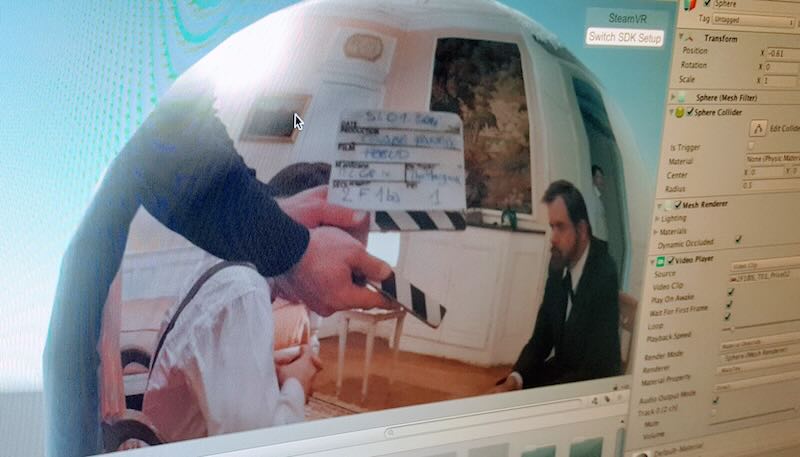

The core components of Freud are two 10 to 11 minute cinematic 360-degree (spherical) monoscopic videos with actors and dialogue. These linear film sequences are complemented with spatialised sound design and interactive music. The videos run in the Unity game engine, which receives control parameters from the EEG system. Audio in 2nd order Ambisonics and head-locked stereo runs separately, in sync, from the Wwise sound engine. The primary VR platform is HTC Vive, but thanks to the OpenXR standard, it runs on other devices, too.
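As a small aside on what "2nd order Ambisonics plus head-locked stereo" means in terms of audio channels: a full-sphere Ambisonic signal of order N carries (N + 1)² channels. The sketch below only illustrates that arithmetic; the function name is hypothetical and not part of the project.

```python
def ambisonic_channels(order: int) -> int:
    """Channel count of a full-sphere Ambisonic signal of the given order."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


# 2nd order Ambisonic bed plus a separate head-locked stereo pair.
SECOND_ORDER_CHANNELS = ambisonic_channels(2)
HEADLOCKED_STEREO_CHANNELS = 2
TOTAL_CHANNELS = SECOND_ORDER_CHANNELS + HEADLOCKED_STEREO_CHANNELS
```

So each exported 2nd order file holds nine channels, and the head-locked stereo adds two more that do not rotate with the listener's head.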

The film is a joint production by Le Crabe Fantôme production company based in Nantes, DVgroup VR production company based in Paris, the École supérieure d'art et de design TALM (ESAD-TALM), Le Laboratoire des Sciences du Numérique (LS2N) of the University of Nantes / Polytech Nantes, RFI Ouest Industries Creatives, and the University of Bordeaux.

The project has been a part of Marie-Laure's post-doctoral research at the Baltic Film, Media, Arts and Communication School of Tallinn University, and the LS2N in Nantes.

During the internship in 2018 I was based in Le Mans, at the ESAD-TALM art school. I worked closely with the other intern, Christophe Rey, whose main job was video editing, video stitching and 3D modelling. From time to time we travelled to Nantes to meet the students at the University of Nantes who were working on the EEG algorithms and Unity under the supervision of Professor Toinon Vigier.

The topics of hypnosis, psychoanalysis, self-knowledge, and psychology in general are extremely interesting in the context of VR, and exploring their relationships in terms of sound design would have been an intriguing challenge for me. Unfortunately, due to the massive workload of practically realising the sounds and music, I did not have any extra time to research or explore the topic.

Structure of the experience

Setting up

The Freud experience starts with the spectator sitting down on a swivel chair. An EEG headset (by Emotiv) is fitted on the spectator's head, after which an HTC Vive and headphones are put on. Software related to the EEG measurements is already running, and the first scene of the Freud Unity project is ready to start.

Introduction #1

A 360° image of a web of neural connections surrounds the viewer in all directions. Short, high-pitched "sounds of neurones" whistle around. A steady synth pad grounds the soundscape with the intention of creating a relaxed feeling. After a few seconds, a female voice welcomes the spectator to the experience and explains the EEG measurements and the calibration that will take place next.

AUDIO/MUSIC WORKFLOW IN A NUTSHELL:

1. VO recorded into Pro Tools

2. Cleaned with iZotope RX, edited, slightly EQ'd and compressed, exported to Wwise

3. Neurone sounds played with theremin, recorded into Pro Tools, edited, exported to Wwise

4. Played back from a random container with randomised pitch and randomised positions in 3D space

5. Synthpad played with Pro Tools' Xpand!2, two separate tracks exported to Wwise

6. Spatialised in 3D

7. Routed through 2nd order Ambisonic bus in Wwise with Auro-Headphones spatialiser
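Step 4 above can be illustrated outside Wwise. The following is a minimal Python sketch of the idea behind a random container with randomised pitch and position, not the Wwise implementation: the clip names, the pitch range in cents, the fixed radius and the rule of not repeating the previous clip are all assumptions made for the example.

```python
import math
import random
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Trigger:
    clip: str                             # which recording to play
    pitch_cents: float                    # random detune applied to the clip
    position: Tuple[float, float, float]  # where the sound is placed


def random_point_on_sphere(rng: random.Random, radius: float) -> Tuple[float, float, float]:
    """Pick a point uniformly distributed on a sphere around the listener."""
    azimuth = rng.uniform(0.0, 2.0 * math.pi)
    z = rng.uniform(-1.0, 1.0)  # uniform z gives a uniform distribution on the sphere
    r_xy = math.sqrt(1.0 - z * z)
    return (radius * r_xy * math.cos(azimuth),
            radius * r_xy * math.sin(azimuth),
            radius * z)


def next_trigger(clips: Sequence[str], rng: random.Random,
                 last_clip: Optional[str] = None,
                 pitch_range_cents: float = 200.0,
                 radius: float = 2.0) -> Trigger:
    """Choose a clip (avoiding an immediate repeat), a pitch offset and a position."""
    candidates = [c for c in clips if c != last_clip] or list(clips)
    clip = rng.choice(candidates)
    pitch = rng.uniform(-pitch_range_cents, pitch_range_cents)
    return Trigger(clip, pitch, random_point_on_sphere(rng, radius))


# Hypothetical clip names, for illustration only.
NEURONE_CLIPS = ["neurone_01", "neurone_02", "neurone_03", "neurone_04"]
```

Calling `next_trigger(NEURONE_CLIPS, random.Random(), last_clip=previous.clip)` each time a sound is due gives a stream of short sounds that never sit in the same place or at quite the same pitch twice in a row.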

Calibration

While the user is wearing an EEG cap and VR headset, a series of everyday sounds from the IADS-2 (International Affective Digitized Sounds) database is played through the headphones. The sounds are supposed to trigger different emotions in the user, and the EEG sensory data is then recorded and interpreted by the system to estimate the user's valence and arousal. The EEG training and estimation system is developed by Joseph Larralde and his team at the University of Bordeaux.

Introduction #2

This is similar to the first introduction, but this time the voice explains more about when and how the EEG measurements are used during the experience. She also welcomes the spectator to Vienna in 1938, where the frame story (the prologue) is set.

Opening credits

Freud, la dernière hypnose starts with credits appearing over black. Music with theremin, piano and strings is playing (see the video at the beginning).

AUDIO/MUSIC WORKFLOW IN A NUTSHELL:

1. Piano and theremin theme ("Freud's theme") played with Pro Tools' MiniGrand and a real theremin

2. String theme ("Karl's theme") played in Pro Tools with Spitfire Chamber Strings sample library

3. Exported to Wwise as a ready-made mix, using a headlocked stereo-bus

Prologue

This is an audio-only prologue scene where Sigmund Freud reflects on his experiences with hypnosis in the early days of his career. The spectator can hear street sounds coming from a window, a clock ticking in the room, and Freud lighting a cigar, walking around on a wooden floor and talking. Visually there's only a computer-generated trace of smoke following Freud's movements.

AUDIO/MUSIC WORKFLOW IN A NUTSHELL:

1. Monologue recorded on location in Nantes with a boom and an Ambisonic microphone

2. After many tests I decided to use only the boom mic

3. Foleys for footsteps and clothes recorded in Le Mans

4. Street sounds constructed from my own field recordings

5. Edited and spatialised in Reaper using IEM room encoder and 2nd order Ambisonics

6. Played back with Wwise in Unity

7. Not being happy with the result, I later reconstructed the scene in Unity using animated audio objects and the dearVR spatialiser, with a much more authentic 3D effect

Choice

The spectator finds themself in a late 19th century room with paintings on the wall, a fireplace, a bed, books on a shelf... We're now inside a 360° film. There are two characters in the room: Karl von Schroeh, the patient, is sitting and making dyskinetic movements with his hands. Freud is standing, now younger and in the early years of his career.

The spectator is encouraged to choose between the two characters by gazing at one of them for a while. When gazing at Karl, the spectator hears his hand foleys and fragmented breathing. When gazing at Freud, the spectator hears him making little noises with his mouth, coughs, sighs, etc. A visual timer indicates when the selection will be made. In the background we hear a short loop of music with theremin and piano.
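The gaze-based choice is a dwell-time selection. Here is a minimal Python sketch of that logic, assuming a fixed dwell duration and a timer that resets whenever the gaze moves to another target; the class name, the 3-second default and the reset rule are illustrative assumptions, not the project's actual Unity code.

```python
from typing import Optional


class GazeSelector:
    """Select a target once it has been gazed at continuously for long enough."""

    def __init__(self, dwell_seconds: float = 3.0):
        self.dwell_seconds = dwell_seconds
        self.target: Optional[str] = None  # what is currently being gazed at
        self.elapsed = 0.0                 # how long the gaze has stayed on it

    def update(self, gazed_target: Optional[str], dt: float) -> Optional[str]:
        """Call once per frame; returns the chosen target when the timer completes."""
        if gazed_target != self.target:
            # Gaze moved to something else (or to nothing): restart the timer.
            self.target = gazed_target
            self.elapsed = 0.0
        elif gazed_target is not None:
            self.elapsed += dt
        if self.target is not None and self.elapsed >= self.dwell_seconds:
            return self.target
        return None

    @property
    def progress(self) -> float:
        """Value from 0 to 1 that could drive the visual timer."""
        if self.target is None:
            return 0.0
        return min(self.elapsed / self.dwell_seconds, 1.0)
```

The same `target` value could also decide which character's foleys are audible while the timer fills up.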

AUDIO/MUSIC WORKFLOW IN A NUTSHELL:

1. Foleys and sounds edited from the wild location recordings, synced to the picture in Reaper

2. Foleys integrated and spatialised as game objects using Unity's own audio engine

3. Music created in Pro Tools with theremin and MiniGrand software synth, exported to Wwise

The 360 films: Freud and Karl versions

If the spectator chooses Freud, an 11-minute 360° film about Freud's last hypnosis session with Karl starts. The story is told from Freud's perspective, although the camera keeps jumping between first-person and third-person views. If the spectator chooses Karl, the same story is shown from his perspective.

There's no music in the films until about halfway through, when both Freud and Karl start hallucinating due to Freud's hypnosis. That is when the interactive part starts: the spectator's valence level affects the arrangement and feel of the music. When the measured and calculated valence level moves towards the positive side, more instrument layers and rhythmic content are added; when the level turns negative, a re-harmonising effect and low tones are used.
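To make the mapping concrete, here is a minimal Python sketch of how a valence value could be turned into levels for music layers. It assumes valence is normalised to the range -1 to 1, and the layer names, the linear curves and the point at which rhythm enters are hypothetical; the real arrangement logic lives in Wwise and is more nuanced.

```python
from typing import Dict


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def music_layer_levels(valence: float) -> Dict[str, float]:
    """Map valence (assumed range -1..1) to a level between 0 and 1 per layer."""
    v = clamp(valence, -1.0, 1.0)
    positive = max(v, 0.0)   # how far valence is on the positive side
    negative = max(-v, 0.0)  # how far valence is on the negative side
    return {
        "base": 1.0,                                        # always playing
        "extra_instruments": positive,                      # fades in as valence rises
        "rhythm": clamp((positive - 0.5) * 2.0, 0.0, 1.0),  # enters only above 0.5
        "reharmonised": negative,                           # darker harmony when negative
        "low_tones": negative,
    }
```

In practice the incoming valence would probably also need smoothing over time so that the arrangement does not jump with every fluctuation of the EEG estimate.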

There will also be a graphical meter inserted somewhere inside the VR world providing the spectator with visual feedback of the valence level.

AUDIO/MUSIC WORKFLOW IN A NUTSHELL:

1. Dialogue recorded on location with wireless lavaliers

2. SoundField Ambisonic microphone running simultaneously to capture the room

3. Dialogue edited and cleaned using Reaper and iZotope RX

4. Foleys recorded and edited in Reaper

5. Sound effects created with theremin and other sound sources with several plugins in Reaper

6. Spatialisation done and synced with the 360-video using the FB360 toolkit

7. Audio re-synced with Vordio after several picture re-edits

8. Ambisonic 2nd order files and headlocked stereo files exported to Wwise

9. Videos running in Unity video player, synced with audio

10. Music created in Pro Tools using theremin and software synths and sample libraries

11. Stems exported to Wwise for building the interactive music

Choice (again)

After one of the films has finished, the spectator is taken back to the choice sequence, where they can choose to watch the other version (or the same one again) or exit to the epilogue. When gazing at the exit sign, the spectator hears a distant sound of soldiers marching.

Epilogue

In the epilogue, the spectator is taken back to the old Freud walking and talking in his Vienna apartment in 1938. This time we hear German soldiers marching past the window, a reminder of the impending exile of this Jewish neurologist and his family.

End credits

At the time of writing, I don't know how the end credits will be realised, but there was an idea to give the spectator a visual report of their emotional data recorded during the whole experience. In addition, the end credit music will change according to the same data, thus giving audible feedback on the experienced emotions. The interactive music is also spatialised so that each instrument group is positioned around the spectator in 3D space.
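One simple way to position instrument groups around the spectator is to spread them evenly on a circle at ear height. The Python sketch below assumes that layout, a Unity-style coordinate system (x to the right, y up, z forward) and made-up group names; the actual placement in the project may differ.

```python
import math
from typing import Dict, Sequence, Tuple


def ring_positions(groups: Sequence[str],
                   radius: float = 2.0) -> Dict[str, Tuple[float, float, float]]:
    """Spread the groups evenly on a horizontal circle around the listener.

    The first group is placed straight ahead and the rest follow clockwise
    when seen from above.
    """
    n = len(groups)
    positions = {}
    for i, name in enumerate(groups):
        azimuth = 2.0 * math.pi * i / n
        positions[name] = (radius * math.sin(azimuth), 0.0, radius * math.cos(azimuth))
    return positions


# Hypothetical instrument groups, for illustration only.
GROUPS = ["piano", "strings", "theremin", "low_synth"]
POSITIONS = ring_positions(GROUPS)
```

With four groups this puts one in front, one to the right, one behind and one to the left of the spectator.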

Next: